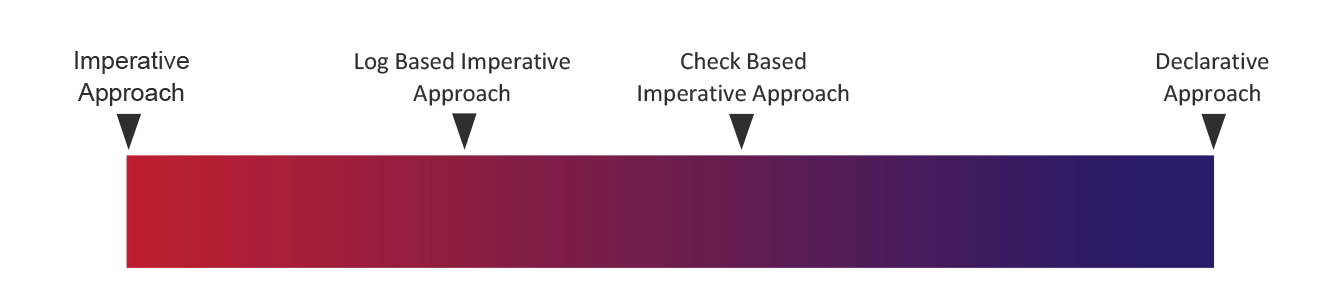

Following on from Imperative vs declarative for Data. In this blog post, we will look at different ways that the Imperative Approach can be implemented and also give an overview on how a basic Declarative Approach could work.

Pure Imperative Approach

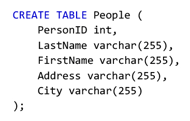

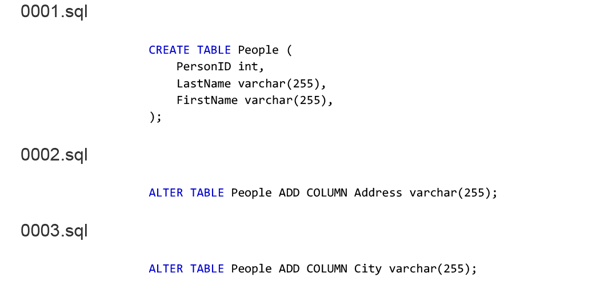

As we have seen in the previous section, a pure imperative approach really doesn’t work for databases—most SQL DDL statements require a very specific initial state e.g.

Which requires an initial state of the table not existing, or can be run at any time, but lose critical information e.g.

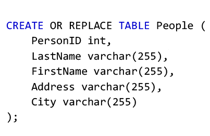

Will recreate this table with the correct structure irrespective of whether it exists or not and whatever current structure it has. However, it will remove all the data in the table.

Log based Imperative Approach

For many years this has been the most viable and practical solution to these challenges. Essentially this approach requires the admin to create each new Imperative statement in a separate step and each step is given a unique identifier. A log is kept (either in an external state store, or in the target database itself) of which steps have been applied to which Database. The engine that applies these reads a list of all the possible steps, which steps have been already applied and then applies the missing ones.

Considering a previous example this may start out like:

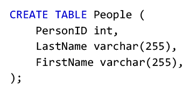

0001.sql

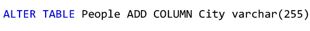

Which would be applied and create the table. Sometime later, when new columns need to be added, the follow could be created, and the next time the engine runs it detects that the highest id that has been applied is 0001 so it applies 0002 and 0003.

0002.sql

0003.sql

There are many solutions build around this fundamental premise including Snowchange, Flyway, Liquibase, Squitch, Red-Gate.

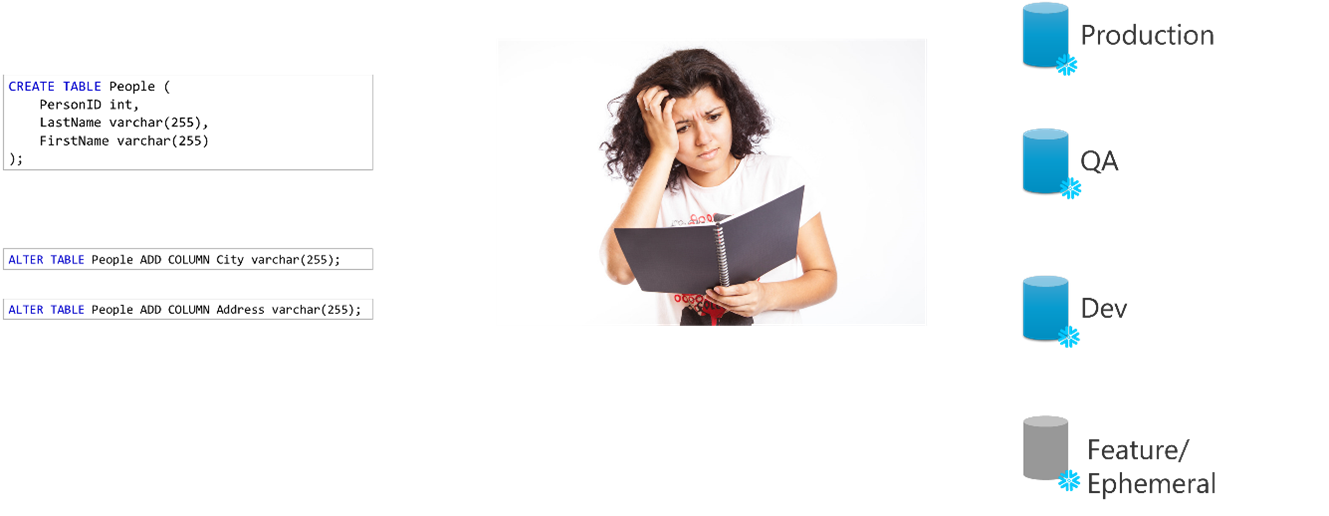

This approach is popular in the wider database community, well tested, and, in particular, it allows multiple different instances of a Database (e.g. Dev, QA, Prod) since it can keep a separate log about what has been applied for each.

However, it is based around a fundamental assumption that the Schema and Managed Objects are within a Database that:

- Cannot be touched by any other system i.e. no changes can be made by a person or another system, since this alters the state in a way the system doesn’t know about and this leads to unpredictable behaviour. While everyone would be careful not to do this in Production, it’s common for a manual change to be made in Dev and subsequent steps being added which assume this change and therefore break when applied in other environments.

- Is monotonically moving forwards e.g. there is a single, linear set of changes to the Database that always moves forwards. However, the advanced features in modern Cloud Data Platforms such as Snowflake, break this model. The concepts of Zero Copy Clone and Time Travel for Databases, Schemas, Tables etc break this assumption. Consider that all of these have been applied:

But an issue occurs with the final ALTER TABLE statement. We don’t need anything so prosaic as restoring from a backup, Snowflake Time Travel simply allows us to revert to the point in time before 0003.sql was applied and everything is recovered. However, we now have a situation where the external engine and its logs believe this statement has been applied, but the actual system itself is in a different state.

This approach is also potentially fragile—while the log based approach in theory ensures that each statement runs once and exactly once, the whole system requires each step to work correctly. If the desired state of a system is defined in a set of 500 Imperative steps, the system will only be in the correct state if each of these 500 steps execute perfectly.

In practical operation, creating these numbered/versioned files in the right order can be very hard in a branched/collaborative environment. In a simple, but very common, example, if the latest file is ‘3’, several people working on new features/changes take ‘4’. When they find our that there is a conflict and someone wants to move to ‘5’, that has been taken by someone else. Organisations that have done this successfully often end up assigning a single ‘master of the numbers’ that other people apply to. In more complex example the people working on feature B discover that feature A is already making some of the changes they need and so work on this basis, then feature A hits problems and is delayed—now feature B can’t be deployed since it’s numbered scripts depended on the numbered script in feature A.

The reality is that as Databases and Cloud Data Platforms become more and more advanced, the assumptions that allow a Log Based Imperative Approach to work have broken down and the requirement for collaborative working has made it operationally very challenging and error prone.

Check based imperative Approach

When looking at how to build robust automation, it’s very often useful to see how a human being would handle the same requirement. Before we examine the Check based imperative approach, lets consider how a real person would handle this requirement:

“here is an ALTER TABLE <table> ADD COLUMN <newcolumn>—it has been deployed to some of our environments and not to others—go and get all systems up to date”.

Would they look through all the query logs and find out of this statement had been run? No, they would be much more concerned about whether the result or effect of the query was present. They would therefore look at <table> and see if <newcolumn> existed as defined. If it wasn’t there, they would run the SQL to add it, if it was there, they’d check this off their list and move on to the next system.

The critical point here is that they are deciding whether to take an action based on whether the result of that action already exists in the system.

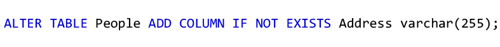

Interestingly many databases already have this concept built in for certain type of operation. I could write:

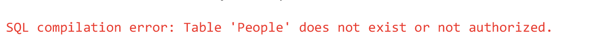

The first time I execute this it will work, but every subsequent time it will throw an error like:

However, if I write:

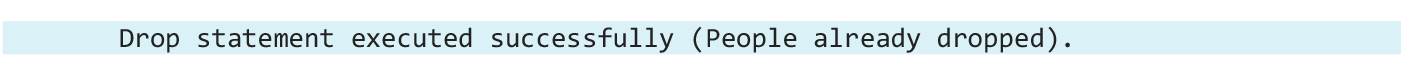

The first time it runs it will drop the table, but every subsequent time it will return a graceful message like: Drop statement executed successfully (People already dropped).

The inbuilt capabilities of the database have provided me with a way to define and action “DROP TABLE People”, but also a criteria that needs to be met to try to actually execute this action “IF EXISTS”. The database itself will run the check first and then, based on the results of that check, will decide to run the action or not. This is a Check based imperative approach—run a fundamentally imperative statement, but only if a particular check has been met.

Doesn’t this solve all our problems then? Unfortunately not, since in all databases, this functionality is only available for specific use cases.

Will check is the table exists, and if not create it, but if it does exist it will return a graceful message and exit, even if the table that exists is different to the one defined.

Is simply not a supported statement in most databases (PostgresSQL and MariaDB being notable exceptions). However there is no database that has enough “IF NOT EXISTS” functionality to support all use cases, and in some situations “IF NOT EXISTS” is not enough—for example a DDL to alter the data type of an existing column.

Let’s consider for a moment three Imperative statements and a variety of different environments, and a human who doesn’t know which ones have been applied to which Figure 3—How would a human solve this?

Figure 3—How would a human solve this?

How would this person go about working out which of these need to be run and on which environments? Would they go a look in the history log for queries (effectively emulating the Log Based Imperative Approach?). Almost certainly not because:

- This is a time consuming process

- Just because a statement ran at some point in history, it doesn’t mean that the result of that statement is still in effect. If an ADD COLUMN was found in the history, they would have to scan the entire history since then to confirm that no other statements had DROPPED or ALTERED this column since

- Check if People table exists. If not run the CREATE TABLE.

- Check if People table has the City column. If not, run the ALTER… ADD COLUMN City.

- Check if People table has the Address column. If not, run the ALTER… ADD COLUMN Address.

In a more abstract sense, they are “checking the resultant state that would result from a statement being executed and if that state doesn’t exist, they would run the statement”.

Therefore, to be really useful and powerful, we would need a way of conditionally executing ANY DDL statements based on an arbitrary, flexible logical check with complete access to the target database. For example:

Check Statement: SELECT count(*) FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_NAME = 'People' AND COLUMN_NAME = 'Address'

Check Expected Result: 1

Statement: ALTER TABLE People ADD COLUMN IF NOT EXISTS Address varchar(255);

This is discussed in the paper An implementation approach to Check Based Imperative Approach to Schema Management.

Declarative Approach

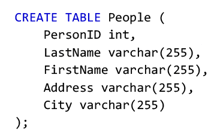

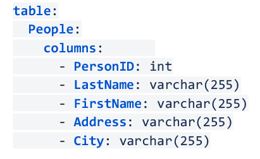

The Declarative Approach has already been well defined. We start with a highly declarative definition like:

Apply a declarative compiler as per Figure 2 which performs the following 7:

- Create, parse and validate input Declarative Definition and create the desired state

- Gather current state from the target system

- Compare the desired state and current state and create a set of differences

- Determine the order in which differences need to be applied (e.g. you can’t GRANT a ROLE to a USER until both the USER and ROLE exist)

- Turn this ordered set of diffs into a set of Imperative Steps

- Execute the Imperative Steps against the target system

- Handle any failures

Conclusion

As discussed above these two ‘pure’ approaches are points on a spectrum, with the Log Based and Check Based Imperative approaches being points on that spectrum.

What does this mean today? For the DataOps for Snowflake platform we believe that a full Declarative Approach is the long-term approach for the whole industry. When looking at how other technologies areas (e.g. infrastructure, networking etc) have progressed, these have all followed an inexorable march from Imperative to Declarative over time, but this has taken some time to mature. We do not believe the technologies are quite there today, and we are actively working on projects to make this a reality. However, we believe all use cases today are possible using a set of approaches:

- Use “CREATE OR REPLACE X” where possible (i.e. the target object is stateless, or the state doesn’t matter)

- Use “CREATE X IF NOT EXISTS” where possible

- Use the conditional execute approach (and possibly some convenience wrappers to make specific high frequency use cases even easier)

In the future a Declarative Approach will be a natural evolution of this.

In the next series of blog posts we will look specifically at an implementation approach to Check Based Imperative Approach to Schema Management.

By

DataOps.live

By

DataOps.live