This is the first in a series of blog posts on automating the lifecycle events (e.g. creation, alteration, deletion) of database objects, which is critical to achieving repeatable DataOps processes. The code in the central repository, rather than the database itself, should be the source of truth for the data structure and models.

However, applying changes (creations, alterations, deletions) to database objects requires SQL statements such as CREATE, ALTER and DROP, which behave imperatively: each statement is a command that changes the current state. This means that a sequence of operations must be executed in a certain order, from a known database state, in order to reach the target configuration.

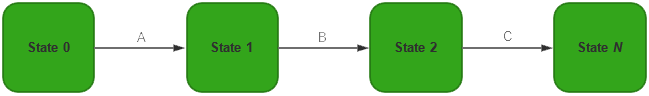

In the diagram above, the target is State N and the starting point is State 0. Operations A, B and C are applied in sequence, passing through States 1 and 2 in order to reach the target state.

Take the following example:

CREATE DATABASE ...

USE DATABASE ...

CREATE SCHEMA ...

USE SCHEMA ...

CREATE TABLE ...

CREATE ROLE ...

GRANT ... ON TABLE ... TO ROLE ...

The above code expects a particular starting state, i.e. no existing database, schema, table, etc. If, for example, the database already existed when the code was run, the first statement would fail with an error.
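The failure described above can be reproduced with a minimal sketch. It assumes Python's built-in `sqlite3` module as a stand-in for the real database platform, and the `customers` table and its index are hypothetical names chosen for illustration. The same script succeeds against an empty database and fails when run a second time.

```python
import sqlite3

# Hypothetical imperative script. SQLite stands in for the real platform,
# and the object names are illustrative only.
SCRIPT = [
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)",
    "CREATE INDEX idx_customers_name ON customers (name)",
]


def run_script(conn):
    """Execute each statement in order; the first error aborts the run."""
    for statement in SCRIPT:
        conn.execute(statement)


conn = sqlite3.connect(":memory:")
run_script(conn)  # first run: the empty starting state matches expectations

try:
    run_script(conn)  # second run: the table already exists
    second_run_error = None
except sqlite3.OperationalError as exc:
    second_run_error = str(exc)

print("Second run failed with:", second_run_error)
```

The script itself did not change between the two runs. Only the starting state did, which is exactly the sensitivity that makes imperative change scripts hard to repeat.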

In general, imperative procedures suffer from a number of limitations and drawbacks, including:

- The starting point must be well defined and known in advance, otherwise changes may fail (e.g. if an object to be altered does not exist).

- Some changes must be executed in sequence (e.g. applying a grant to a table after it has been created), leading to complex serial/parallel branching processes (DAGs).

- A failure part-way through an imperative process can leave the database in an unknown state.

- The process author will need to be familiar with the low-level database commands and operations, including limiting factors such as execution times and potential race conditions.
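The sequencing constraint in the list above can be sketched as a small dependency graph. This is only an illustration: the object names and the dependency map are assumptions based on the earlier example, and Python's standard `graphlib` module (Python 3.9+) is used to derive one valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each object lists the objects that must
# already exist before it can be created.
DEPENDS_ON = {
    "database": set(),
    "schema": {"database"},
    "table": {"schema"},
    "role": set(),
    "grant": {"table", "role"},
}


def execution_order(depends_on):
    """Return one valid creation order in which dependencies come first."""
    return list(TopologicalSorter(depends_on).static_order())


order = execution_order(DEPENDS_ON)
print(order)
```

The role does not depend on the database, schema or table, so it could be created in parallel with them, while the grant has to wait for both branches. This is the serial/parallel branching that the process author must otherwise manage by hand.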

Ideally, it would be possible to apply changes to a database in an idempotent way. This means that a sequence of operations is still applied, but it is insensitive to the initial state, and could therefore be applied one or more times with the same successful effect. If idempotent code could be used, a DataOps process would not need to know the current state of the database, worry about when the process was last executed (or what the result of the last execution was), or care if external processes have updated the database, as the result should always be the same.
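As a minimal sketch of this idea, the example below again assumes Python's built-in `sqlite3` module as a stand-in for the real platform, with hypothetical object names. Each statement uses `IF NOT EXISTS`, so the script can be applied repeatedly, and from different starting states, with the same outcome.

```python
import sqlite3

# Hypothetical idempotent script. SQLite stands in for the real platform,
# and the object names are illustrative only.
IDEMPOTENT_SCRIPT = [
    "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT)",
    "CREATE INDEX IF NOT EXISTS idx_customers_name ON customers (name)",
]


def apply(conn):
    """Apply every statement; objects that already exist are skipped."""
    for statement in IDEMPOTENT_SCRIPT:
        conn.execute(statement)


def snapshot(conn):
    """List the (type, name) of every object in the database."""
    query = "SELECT type, name FROM sqlite_master ORDER BY type, name"
    return conn.execute(query).fetchall()


# Starting state A: an empty database.
fresh = sqlite3.connect(":memory:")

# Starting state B: the table already exists but the index does not.
partial = sqlite3.connect(":memory:")
partial.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

results = []
for conn in (fresh, partial):
    apply(conn)
    apply(conn)  # re-running is harmless
    results.append(snapshot(conn))

print(results[0] == results[1])
```

Note that `IF NOT EXISTS` only checks whether an object with that name exists. It does not reconcile an existing table whose columns differ from the definition in the script, so this sketch covers creation only, not alteration.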

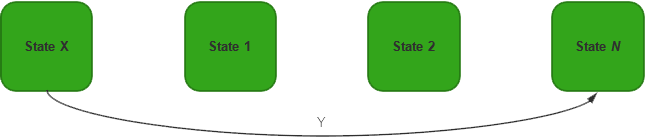

In the diagram above, an idempotent approach can move the database from State X, where X is an unknown state that could be State 0, 1 or 2, to the target State N.

The next two blog posts will examine the DDL operations used in SQL-based relational databases and assess whether each can be executed in an idempotent way, particularly in the context of common DataOps use cases, highlighting key exceptions and methods for handling them. Snowflake has been chosen as the example platform because it is a popular cloud-based database platform that is commonly used in DataOps applications.