Power lives in the Cloud

If you are reading this article, we don’t have to tell you about the benefits the Snowflake Data Cloud brings. With the growing adoption of AI and ML, data is becoming more critical and the compute needed to train models keeps growing, so we can only expect Snowflake to play an even more significant role.

I was very impressed when I could go to the AWS cloud and train my test ML model via SageMaker, spinning up a virtual machine with the compute I needed. I thought it probably couldn’t get any better. However, I was positively surprised to find that you could combine that with the ease and familiarity of SQL. With Snowflake, you can work with your data a lot more easily without losing any of the benefits of flexible compute. With Snowpark, you can quickly switch between SQL and Python and use virtually unlimited compute power to train your models right where the data lives.

With power comes responsibility

However, that power comes at a cost, no matter what platform you use. I love the hilarious example Tomáš Sobotík gave at Snowflake Summit 2023, where he says that one poor, unobserved query can make a warehouse run for two days and result in an unexpected cost spike, which for some could even be a significant part of the budget.

Hearing that, I feel lucky that I started my IT career years ago as an Oracle SQL developer working on an on-prem database. It is a bit awkward to admit, but it was common for some of my practice queries to run for a very long time on the reporting warehouse. Nowadays, those queries would have cost more than my salary at the time.

Making sure you don’t run out of budget because a bunch of curious young developers are tinkering with your data is one thing. Maybe you are lucky and have a Snowflake Superhero who can recommend setting query or warehouse timeouts or spend limits. Luckily, common Snowflake consumption pitfalls are well known. If you are curious about some of those, check out the Snowflake Summit 2023 session mentioned above or the recent recording we did with our partner XponentL data on Optimizing Snowflake Spend.
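To make the timeouts and spend limits mentioned above a bit more concrete, here is a minimal sketch that only builds the corresponding Snowflake SQL text: a statement timeout and auto-suspend period on a warehouse, plus a resource monitor with a credit quota. The warehouse name, monitor name, thresholds, and helper function names are all hypothetical and chosen for illustration; the right values depend on your own workloads, and executing the statements is left to whichever Snowflake client you use.

```python
import re

# Plain unquoted Snowflake identifiers only: letters, digits, underscores, dollar signs.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def _check_identifier(name: str) -> str:
    """Reject anything that is not a plain unquoted identifier."""
    if not _IDENTIFIER.match(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def warehouse_limits_sql(warehouse: str, timeout_s: int, auto_suspend_s: int) -> list[str]:
    """Statements that cap query runtime and idle time on one warehouse."""
    wh = _check_identifier(warehouse)
    return [
        f"ALTER WAREHOUSE {wh} SET STATEMENT_TIMEOUT_IN_SECONDS = {int(timeout_s)};",
        f"ALTER WAREHOUSE {wh} SET AUTO_SUSPEND = {int(auto_suspend_s)};",
    ]


def resource_monitor_sql(monitor: str, warehouse: str, credit_quota: int) -> list[str]:
    """Statements that create a monthly credit quota and attach it to a warehouse."""
    mon = _check_identifier(monitor)
    wh = _check_identifier(warehouse)
    return [
        # Notify at 90% of the quota, suspend the warehouse at 100% (illustrative thresholds).
        f"CREATE OR REPLACE RESOURCE MONITOR {mon} WITH CREDIT_QUOTA = {int(credit_quota)} "
        "FREQUENCY = MONTHLY START_TIMESTAMP = IMMEDIATELY "
        "TRIGGERS ON 90 PERCENT DO NOTIFY ON 100 PERCENT DO SUSPEND;",
        f"ALTER WAREHOUSE {wh} SET RESOURCE_MONITOR = {mon};",
    ]


if __name__ == "__main__":
    # Hypothetical names and limits: one-hour query timeout, 60-second auto-suspend.
    for stmt in warehouse_limits_sql("REPORTING_WH", 3600, 60):
        print(stmt)
    for stmt in resource_monitor_sql("MONTHLY_CAP", "REPORTING_WH", 100):
        print(stmt)
```

Generating the statements rather than typing them by hand makes it easier to apply the same guardrails consistently across many warehouses, which is where automation starts to pay off.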

But nowadays, being defensive in your data strategy is not good enough. You want your data processes to be cost-efficient and economically viable, and we believe that the key to that is observability and automation.

Transparency creates opportunity

Almost anything in any space starts with people and governance. Assigning the proper responsibility to the right people is, I dare say, the most crucial step in achieving results. Our case was no exception. We decided to start managing our Snowflake spend to get the most out of our many Snowflake accounts and assigned Martin Getov, our engineering team lead, to work on our Snowflake organization and account costs.

What comes next? Should Martin start looking at more than 2000 warehouses across our many accounts? Well, yes and no. Martin should have access to essential metrics relevant to his role, but the investigation should be targeted and automated. We believe in the importance of Observability, recently announced by Thomas Steinborn, DataOps.live SVP of Product.

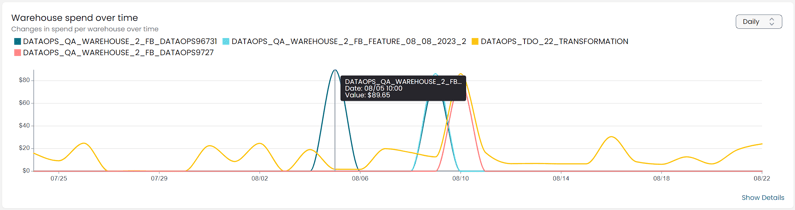

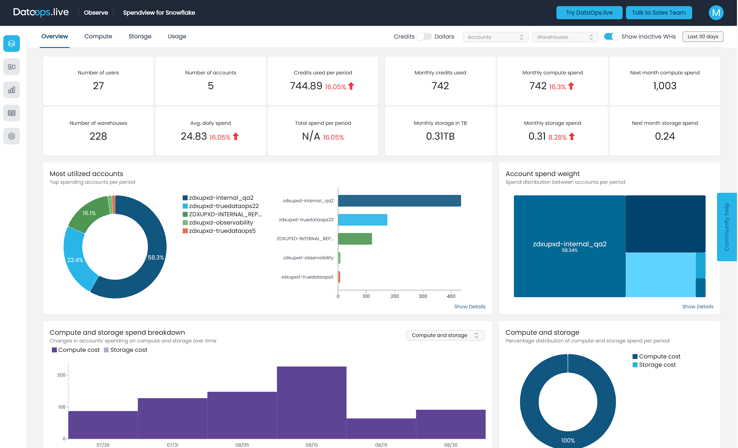

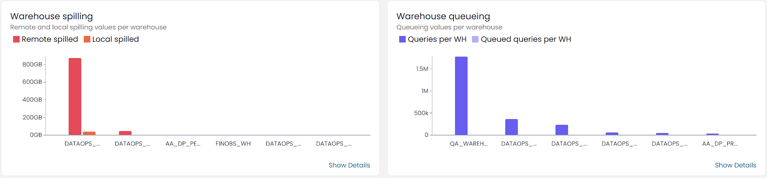

Considering the current challenging market situation, we at DataOps.live wanted to support our Snowflake Data Community and released a free Spendview tool that helps you get an overview of your Snowflake spend and usage. With Spendview, Martin could quickly get an overview of all accounts and warehouses and recognize improvement opportunities. In the first month, we identified 25% worth of wasted compute spend caused by poorly chosen auto-suspend periods and reinvested that compute into improving the Snowflake user experience by eliminating spilling in important warehouses.
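To show why the auto-suspend period matters, here is a rough back-of-the-envelope sketch, not Spendview's actual calculation. It assumes a simplified model: after each burst of queries the warehouse keeps running until either the next query arrives or the auto-suspend period elapses, whichever comes first. The idle gaps and the warehouse size are made-up numbers, the function names are hypothetical, and the model ignores the 60-second minimum billed on each resume as well as any cache warm-up effects.

```python
# Credits per hour for standard warehouses by size.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def billed_idle_seconds(idle_gaps_s: list[int], auto_suspend_s: int) -> int:
    """Seconds the warehouse runs with nothing to do, summed over all idle gaps.

    A gap shorter than the auto-suspend period is billed in full, because the
    warehouse never suspends. A longer gap is billed only up to the period.
    """
    return sum(min(gap, auto_suspend_s) for gap in idle_gaps_s)


def idle_credits(idle_gaps_s: list[int], auto_suspend_s: int, size: str) -> float:
    """Credits spent on idle time under the simplified model above."""
    seconds = billed_idle_seconds(idle_gaps_s, auto_suspend_s)
    return seconds / 3600 * CREDITS_PER_HOUR[size]


if __name__ == "__main__":
    # Hypothetical gaps between query bursts, in seconds.
    gaps = [120, 900, 3600, 45]
    for period in (600, 60):
        credits = idle_credits(gaps, period, "MEDIUM")
        print(f"auto-suspend {period}s: {credits:.2f} idle credits")
```

In this made-up example, a 600-second period bills 1365 idle seconds and a 60-second period bills 225. A very short period is not automatically better, though: every resume starts with a cold local cache, so the right value depends on the workload.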

If you are curious about how we used the warehouse utilization, spilling, and queuing charts, please read Martin’s findings in the Spendview Best Practices section of the DataOps.live community.

Spendview presents the big picture of your Snowflake infrastructure and gives business leaders within the data team the control to make data-driven decisions about their investments in it. It is best paired with either a Snowflake expert (in our case, Martin) or a Snowflake optimization tool such as Snoptimizer, which our good partners at XponentL data provide. Please check out our recent webinar on how different optimization tools can work together to help you get the best outcomes from the Snowflake Data Cloud.

With that, we hope you found valuable advice in our blog, and we invite you to try the free Spendview for Snowflake. Please share your findings with like-minded people in the DataOps.live community, where we will happily support you with automation and observability best practices and advice!

By

Jevgenijs Jelistratovs - Director of Governance Products & Partner Success, at DataOps.live
